For many people who work with data on a daily basis, generating and viewing reports are their main data-related activities.

There’s nothing as wholesome as building a nice-looking report with labels and tips on how best to interpret the data, and with toggles and selectors for further refining the queries.

But how did the data in those reports come to be? Who compiled them? Can they be trusted? Was the data collected fairly? Is it representative?

It’s not much of an exaggeration to say that most people don’t think about these things. They never stop to consider that a huge and complicated system of interconnected parts generates the data in those reports.

The data needs to be collected, processed, and stored before it can be made available to other processes in the organization.

As someone involved in technical marketing, you don’t need to know every detail of this system. But understanding the lifecycle of a single data point – from collection to activation – is crucial.

Decisions made at every milestone of this lifecycle have cascading consequences on all the subsequent steps and, due to the cyclical nature of many data-related processes, often on the preceding steps, too.

In the following sections, we’ll take a look at how different parts of a prototypical analytics system introduce decisions and friction points that might have a huge impact on the interpretations that can be drawn from the data.

Our exploration begins with the tracker, a piece of software designed to capture event data from the visitor’s browser or app. The tracker compiles this information and dispatches it to the collector, which is the entry point of the analytics server. The collector hands the validated data to a processor, where the information is aligned with a schema, and finally the data is stored for access via queries, integrations, and APIs.

Deep Dive

Directed vs. cyclical systems

While it’s customary to describe analytics systems as a directed graph from one component to the next, the reality is often more complex.

For example, if the collector detects data quality issues, it might not forward the hit to the next component (processing), but instead store it in a temporary repository where the event waits to be “fixed”. After fixing, it can be fed back to the original pipeline.

Similarly, if at any point in the pipeline the hit is classified as spam or otherwise unwanted traffic, this information can be returned to the tracker software so that hits matching the same signature are no longer collected at all.

Analytics systems tend to have feedback loops where decisions made at the end of the pipeline can inform processes at the beginning.
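To make the two feedback loops concrete, here is a minimal Python sketch of a toy pipeline. It is not any vendor’s implementation: the `Pipeline` class, the `cid` and `user_agent` fields, and the rule that user agents starting with `bot` count as spam are all hypothetical simplifications. `collect()` plays the role of the collector, and appending to `storage` stands in for processing and storage.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class Pipeline:
    """Toy pipeline with two feedback loops (all names are hypothetical)."""

    blocked_signatures: Set[str] = field(default_factory=set)  # shared back with the tracker
    quarantine: List[Dict] = field(default_factory=list)       # hits waiting to be "fixed"
    storage: List[Dict] = field(default_factory=list)          # stands in for processing + storage

    def tracker_should_send(self, hit: Dict) -> bool:
        # Feedback loop 1: the tracker stops sending hits that match a flagged signature.
        return hit.get("user_agent") not in self.blocked_signatures

    def collect(self, hit: Dict) -> None:
        if not self.tracker_should_send(hit):
            return
        user_agent = hit.get("user_agent", "")
        if user_agent.startswith("bot"):
            # Spam: remember the signature so future matching hits are never collected.
            self.blocked_signatures.add(user_agent)
            return
        if "cid" not in hit:
            # Data quality issue: park the hit instead of forwarding it to processing.
            self.quarantine.append(hit)
            return
        self.storage.append(hit)

    def replay_quarantine(self, fix: Callable[[Dict], Dict]) -> None:
        # Feedback loop 2: fixed hits are fed back into the original pipeline.
        pending, self.quarantine = self.quarantine, []
        for hit in pending:
            self.collect(fix(hit))


pipeline = Pipeline()
pipeline.collect({"cid": "1", "user_agent": "Firefox"})
pipeline.collect({"user_agent": "Chrome"})                   # no cid: quarantined
pipeline.collect({"cid": "2", "user_agent": "bot-crawler"})  # flagged as spam
pipeline.replay_quarantine(lambda hit: {**hit, "cid": "unknown"})
```

After the replay, the quarantined hit reaches storage, while any later hit from `bot-crawler` is dropped before it ever leaves the tracker.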

The tracker runs in the client

The purpose of the tracker is to offer a software interface designed to collect and dispatch data to the collector.

Typically, the tracker would be a JavaScript library that the browser downloads from the vendor’s server (web analytics), or an SDK that developers build into the app (app analytics).

The tracker adds listeners to the web page or the app that automatically collect information about the user’s actions and dispatch it to the collector server.

Example

When you navigate to a web page, the tracker activates and detects a page_view event from you. It collects metadata such as the page address, your browser and device details, the marketing campaign that brought you to the page, and even your geographic location, before dispatching the event to the vendor.

When you then scroll down the page a little to read the content below the fold, the tracker detects a scroll event and dispatches it, too, to the collector server. Additional metadata could include how far you scrolled (as a percentage or in pixels) and perhaps how long you’ve spent on the page.

Once the tracker has detected an event and gathered all the data it needs, it is ready to dispatch the event to the vendor.

This would usually be an HTTP network request to the collector server, with the data payload included either in the request address itself (as query parameters) or in the request body.
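In the browser this logic would be written in JavaScript and sent with something like `fetch()` or `navigator.sendBeacon()`, but the mechanics of putting the payload into the request address are easy to show in Python. The collector URL and the parameter names (`en`, `cid`, `dl`, `et`, `percent_scrolled`) are hypothetical; every vendor defines its own.

```python
import time
from typing import Dict, Optional
from urllib.parse import parse_qs, urlencode, urlsplit

# Hypothetical collector endpoint, for illustration only.
COLLECTOR_URL = "https://collector.example.com/collect"


def build_hit_url(event_name: str, client_id: str, page_url: str,
                  extra: Optional[Dict[str, object]] = None) -> str:
    """Encode an event and its metadata as query parameters of a GET request."""
    payload: Dict[str, object] = {
        "en": event_name,               # event name, e.g. page_view or scroll
        "cid": client_id,               # identifier that distinguishes this user
        "dl": page_url,                 # page the user is viewing
        "et": int(time.time() * 1000),  # event time as Unix time in milliseconds
    }
    payload.update(extra or {})         # event-specific metadata
    return f"{COLLECTOR_URL}?{urlencode(payload)}"


url = build_hit_url("scroll", "12345", "https://www.example.com/article",
                    {"percent_scrolled": 75})
params = parse_qs(urlsplit(url).query)
print(params["en"], params["percent_scrolled"])  # ['scroll'] ['75']
```

Everything arrives at the collector as strings; turning `"75"` back into a number is a job for the schema later in the pipeline.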

Deep Dive

Autonomous trackers

Because trackers are often designed by the analytics system vendors, and because they have autonomous access to their execution environment (the web page or the app), they tend to collect more information than is necessary.

Typically, trackers collect information such as:

- Unique identifiers that distinguish users from others

- Details about the device, browser, and operating system of the user

- Information about the marketing campaign that initiated the current visit

- Metadata about the page or screen the user is currently viewing

- Additional metadata about the user, often stored in databases accessible with cookie tokens

Naturally, vendors want as much data as they can collect. But it might surprise their customers just how much detail can be collected using browser and mobile app technologies.

The most dangerous trackers, from a privacy and data protection point of view, are those that log everything the user does on a page and automatically scrape sensitive data (such as email addresses) from the page. These practices run afoul of many data protection laws.

The collector validates and pre-processes

In general terms, the collector is a server. It’s designed to collect the network requests from trackers, and it’s often the entry point into a larger server-side data pipeline for handling that data.

What the collector does immediately after receiving the network request really depends on the analytics system and on what role validation and pre-processing should have in the overall pipeline.

In some cases, the collector can be very simple. It writes all incoming requests to log storage, from where analytics tools can then parse the information.

But this would not be a very efficient analytics system. It would just be a glorified logging tool, and analysts working in the organization would not be very grateful for having to parse thousands of log entries just to glean some insights from the dataset.

Instead, collectors typically do initial distribution, pre-processing, and validation of the data based on the request footprint alone. For example:

- A request that has ad signals in the URL, for example click identifiers or campaign parameters, could be forwarded to a different processing unit than one that doesn’t.

- A request that originates in the European Economic Area could be forwarded to a more GDPR-friendly pipeline than a request that originates elsewhere.

- A request that seems to come from a virtual machine environment could be flagged as spam, as it could have been generated by automated bot software.

While it’s not wise to have the collector do too much pre-processing, as that adds unnecessary latency to the pipeline, spam mitigation and compliance with regional data protection regulations may need to happen already at this stage, and sometimes even earlier, in the tracker software itself.

Once the collector is satisfied that the request has been adequately validated, it forwards the request to processing.
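The routing rules from the list of collector examples can be sketched as a small function that looks only at the request footprint. This is a minimal sketch under several assumptions: the country has already been resolved from the IP address, ad signals arrive as top-level query parameters, the queue names are hypothetical, and checking for `HeadlessChrome` in the user agent is a crude stand-in for real bot or virtual machine detection.

```python
from typing import List
from urllib.parse import parse_qs, urlsplit

# EU member states plus Iceland, Liechtenstein, and Norway (ISO 3166-1 alpha-2 codes).
EEA_COUNTRIES = {
    "AT", "BE", "BG", "HR", "CY", "CZ", "DK", "EE", "FI", "FR", "DE", "GR", "HU", "IE", "IT",
    "LV", "LT", "LU", "MT", "NL", "PL", "PT", "RO", "SK", "SI", "ES", "SE", "IS", "LI", "NO",
}
# Hypothetical set of ad signals: click identifiers and campaign parameters.
AD_SIGNALS = {"gclid", "fbclid", "utm_source", "utm_medium", "utm_campaign"}


def route_request(url: str, country: str, user_agent: str) -> List[str]:
    """Return the processing queues a request should go to, based on its footprint alone."""
    if "HeadlessChrome" in user_agent:
        # Crude stand-in for bot detection: send it for spam review, nowhere else.
        return ["spam_review"]
    queues = ["eea_pipeline" if country in EEA_COUNTRIES else "global_pipeline"]
    params = parse_qs(urlsplit(url).query)
    if not AD_SIGNALS.isdisjoint(params):
        queues.append("ads_processing")
    return queues


print(route_request("https://collector.example.com/collect?cid=1&gclid=abc", "FI", "Mozilla/5.0"))
# ['eea_pipeline', 'ads_processing']
```

Keeping these checks cheap (a set lookup and a query-string parse) is what lets the collector stay fast while still making routing decisions.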

Ready for a quick break?

Now’s a good time to take a small break – walk around for 5 minutes and have a glass of water. 😊

The processor associates the data with a schema

The processing of the data is a complicated activity.

An analytics system makes use of schemas to align the data received from the collector with the format required by the different parts of the analytics system.

The schema is essentially a blueprint that determines the structure and utility of the collected data.

At processing time, data enrichment can also happen. For example, the user’s IP address could be used to add geolocation signals to the data, the user properties sent with the hit can be scoped to all hits from the user, and monetary values could be automatically converted between currencies.
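The three enrichment examples can be sketched in a few lines of Python. The lookup tables are hypothetical placeholders: the IP prefixes are documentation-only ranges, the exchange rate is made up, and a prefix match stands in for a real reverse IP lookup against a GeoIP database.

```python
from decimal import Decimal
from typing import Dict, List

# Hypothetical lookup tables; a real system would use a GeoIP database and live exchange rates.
COUNTRY_BY_IP_PREFIX = {"203.0.113.": "FI", "198.51.100.": "US"}  # documentation-only IP ranges
EUR_PER_UNIT = {"EUR": Decimal("1"), "USD": Decimal("0.90")}       # made-up conversion rate


def enrich(hits: List[Dict]) -> List[Dict]:
    # Merge the user properties sent with any of a client's hits, so they apply to all of them.
    props_by_client: Dict[str, Dict] = {}
    for hit in hits:
        props_by_client.setdefault(hit["cid"], {}).update(hit.get("user_properties", {}))

    enriched = []
    for hit in hits:
        out = dict(hit)
        ip = hit.get("ip", "")
        # Geolocation: a naive prefix match stands in for a reverse IP lookup.
        out["country"] = next(
            (country for prefix, country in COUNTRY_BY_IP_PREFIX.items() if ip.startswith(prefix)),
            "unknown",
        )
        out["user_properties"] = dict(props_by_client[hit["cid"]])
        if "value" in hit and hit.get("currency") in EUR_PER_UNIT:
            # Currency conversion into EUR, rounded to cents.
            rate = EUR_PER_UNIT[hit["currency"]]
            out["value_eur"] = (Decimal(str(hit["value"])) * rate).quantize(Decimal("0.01"))
        enriched.append(out)
    return enriched


hits = [
    {"cid": "1", "ip": "203.0.113.7", "user_properties": {"status": "loyal"}},
    {"cid": "1", "ip": "198.51.100.2", "value": 10, "currency": "USD"},
]
result = enrich(hits)  # the purchase hit now also carries status "loyal" and value_eur 9.00
```

Using `Decimal` rather than floats avoids rounding surprises in monetary values, which matters once converted amounts are summed in reports.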

Data processing is arguably the most significant part of any analytics system, and it’s where analytics products differ from each other the most.

When a vendor decides upon a schema, they are making a decision for all their users on how to interpret the data. Even though many analytics systems offer ways of modifying these schemas or exporting “raw” data where the schema is only minimally applied, most tool users will likely rely on the default settings, which can color their analyses a great deal.

For this reason, if you want to use an analytics system efficiently, you need to familiarize yourself with the various schemas it uses.

Deep Dive

The schema establishes the grammar of the data pipeline

The schema issues instructions for translating the events and hits into the semantic structure required by the overall data pipeline. The schema is not just descriptive – it can also include validation criteria for whether fields are required or optional, and what types of values they should have.

Often, the schema instructs how the data should be stored. In data warehouses, for example, the schema is applied at storage time. Thus the schema dictates how the data can ultimately be queried.

For example, let’s imagine this simple data payload in the request URL:

&cid=12345&et=1694157514167&up.status=loyal

Here we have a payload of three key-value pairs. The schema could then instruct the following:

| Parameter name | Value type | Column name | Required |
|---|---|---|---|
| cid | Numeric | client_id | True |
| et | Timestamp (Unix time, milliseconds) | event_timestamp | False |
| up.status | Alphanumeric | user_properties.status | False |
| … | … | … | … |

If the processor finds a mismatch against the schema, for example a missing cid parameter or an et parameter that is not a valid timestamp, it could flag the event as malformed.
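The schema table above can be expressed as data and applied to the example payload. This is a minimal sketch, not how any particular analytics system implements schemas: the `SCHEMA` dictionary and the `convert` and `apply_schema` helpers are hypothetical, and parameters the schema doesn’t mention are simply ignored.

```python
from datetime import datetime, timezone
from typing import Dict, List, Tuple
from urllib.parse import parse_qsl

# The schema table, expressed as data.
SCHEMA = {
    "cid": {"type": "numeric", "column": "client_id", "required": True},
    "et": {"type": "timestamp", "column": "event_timestamp", "required": False},
    "up.status": {"type": "alphanumeric", "column": "user_properties.status", "required": False},
}


def convert(value: str, value_type: str) -> object:
    """Convert a raw parameter value to its schema type, raising ValueError on a mismatch."""
    if value_type == "numeric":
        return int(value)
    if value_type == "timestamp":
        # Unix time in milliseconds -> timezone-aware UTC datetime.
        return datetime.fromtimestamp(int(value) / 1000, tz=timezone.utc)
    if value_type == "alphanumeric" and value.isalnum():
        return value
    raise ValueError(f"not a valid {value_type} value: {value!r}")


def apply_schema(payload: str) -> Tuple[Dict[str, object], List[str]]:
    """Map payload parameters to storage columns and collect validation errors."""
    params = dict(parse_qsl(payload))
    row: Dict[str, object] = {}
    errors: List[str] = []
    for name, rule in SCHEMA.items():
        if name not in params:
            if rule["required"]:
                errors.append(f"missing required parameter {name}")
            continue
        try:
            row[rule["column"]] = convert(params[name], rule["type"])
        except (ValueError, OverflowError, OSError) as exc:
            errors.append(f"{name}: {exc}")
    return row, errors


row, errors = apply_schema("&cid=12345&et=1694157514167&up.status=loyal")
is_malformed = bool(errors)  # False: every value matches its declared type
```

A payload such as `&et=notatime` would produce two errors (the missing `cid` and the invalid timestamp), and the event could then be routed to interim storage instead of the main tables.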

Storage makes the data available for queries

Storage sounds simple enough. It’s a data warehouse, where the logged and processed event data is available for queries.

Example

When you open your analytics tool of choice and look at a report, the data in that report is stored somewhere with the specific purpose of populating the report you are looking at.

If you then open a real-time report that shows you data as it’s collected by the vendor server, the data in that report most likely comes from a different storage location, designed to support the type of streaming access that real-time reports require.

Storage is more than just dumping files in a drive somewhere in the cloud. Analytics systems need to take extensive precautions to appropriately encrypt and protect storage so that data ownership and governance clauses are respected. The data needs to be protected against data breaches, too, to avoid leaking potentially sensitive information to malicious attackers.

Additionally, data protection regulations might dictate conditions for how and where the data needs to be stored, and how it can be accessed to comply with various types of data subject requests, for example when a user asks for all their personal data to be deleted from what the analytics system has collected.

Finally, storage is about utility. The data needs to be stored for some purpose. An important purpose in the data and analytics world is reporting and analysis.

When you use the reporting interface of an analytics tool, or when you use a connector with Google Looker Studio or Tableau, you’re actually looking at the end product of a storage query.

These connected systems use integrations and APIs that allow them to pull data from storage and display it in a visually appealing format.

Deep Dive

Different storage models for different purposes

An analytics system could distribute data to storage in many different ways, depending on what types of interfaces it offers for accessing the data. For example:

- HTTP log storage from the collector for ingestion-time analysis

- Pre-processed data for real-time analysis

- Short-term storage for daily data (small query size)

- Long-term storage for historical data (larger query size)

- Interim storage for hits that need extra processing before they are stored, for example hits that didn’t pass initial validation tests
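The storage models listed above can be sketched as a fan-out step that writes each hit to the appropriate stores. Everything here is a hypothetical simplification: in-memory lists stand in for real storage systems, a fixed-size `deque` stands in for a streaming store that only keeps recent hits, and a nightly `roll_over` job moves older hits from daily to long-term storage.

```python
from collections import deque
from datetime import date
from typing import Any, Dict


def make_stores(realtime_window: int = 1000) -> Dict[str, Any]:
    return {
        "http_log": [],                             # raw requests for ingestion-time analysis
        "realtime": deque(maxlen=realtime_window),  # only the most recent hits, for real-time reports
        "daily": [],                                # recent processed hits (small queries)
        "long_term": [],                            # historical hits (larger queries)
        "interim": [],                              # hits that need extra processing first
    }


def write_hit(stores: Dict[str, Any], hit: Dict) -> None:
    stores["http_log"].append(hit["raw_request"])  # every request is logged as received
    if hit["errors"]:
        stores["interim"].append(hit)              # failed validation: wait for fixing
        return
    stores["realtime"].append(hit)
    stores["daily"].append(hit)


def roll_over(stores: Dict[str, Any], today: date) -> None:
    """Move processed hits from earlier days into long-term storage."""
    keep = []
    for hit in stores["daily"]:
        (keep if hit["date"] == today else stores["long_term"]).append(hit)
    stores["daily"] = keep


stores = make_stores()
write_hit(stores, {"raw_request": "GET /collect?cid=1", "errors": [], "date": date(2023, 9, 7)})
write_hit(stores, {"raw_request": "GET /collect?et=x", "errors": ["missing cid"], "date": date(2023, 9, 8)})
write_hit(stores, {"raw_request": "GET /collect?cid=2", "errors": [], "date": date(2023, 9, 8)})
roll_over(stores, date(2023, 9, 8))
```

The same hit can live in several stores at once; each copy is shaped for a different kind of access, which is why a real-time report and a historical report can disagree for a while.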

Even though storing data is usually inexpensive, querying it isn’t. That’s why large analytics systems often optimize storage access by returning a representative sample of the data rather than the full query result.

By paying more, you can often get access to the unsampled datasets, with the caveat that reports on unsampled data take longer to compile than reports on samples.
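The idea behind a representative sample can be illustrated with simple random sampling: query only a fraction of the events, then scale the count back up. This is a generic statistical sketch, not the sampling method of any particular analytics product; the `estimate_count` helper and the event data are hypothetical.

```python
import random
from typing import Callable, Dict, List


def estimate_count(events: List[Dict], matches: Callable[[Dict], bool],
                   sample_rate: float, seed: int = 0) -> int:
    """Estimate how many events match by counting within a random sample and scaling up."""
    rng = random.Random(seed)
    # Keep each event independently with probability sample_rate.
    sample = [event for event in events if rng.random() < sample_rate]
    hits_in_sample = sum(1 for event in sample if matches(event))
    return round(hits_in_sample / sample_rate)


def is_purchase(event: Dict) -> bool:
    return event["event"] == "purchase"


events = [{"event": "purchase" if i < 997 else "page_view"} for i in range(100_000)]
print(estimate_count(events, is_purchase, sample_rate=1.0))  # 997 (unsampled: exact)
print(estimate_count(events, is_purchase, sample_rate=0.1))  # close to 997, but rarely exact
```

With a 10% sample the query touches roughly a tenth of the rows, which is where the savings come from; the price is an estimate whose error shrinks as the sample rate grows.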

Reports and integrations put the data to use

For many end users, reporting is the main use case for an analytics system.

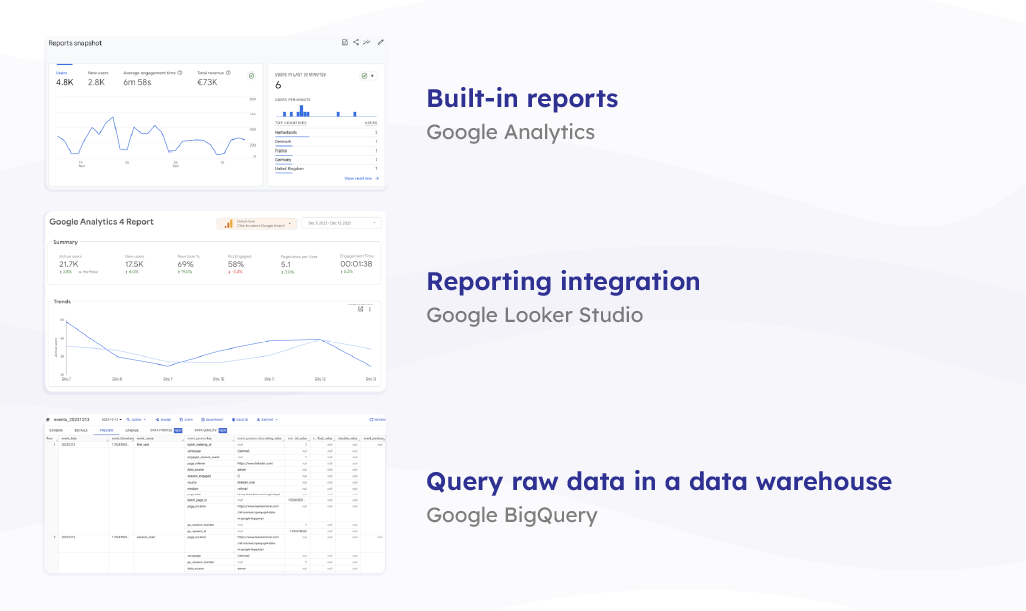

Some analytics tools offer a built-in reporting suite, which has privileged access to storage for displaying the data in predetermined ways.

However, these days it’s very common to use tools specifically designed for reporting, such as Google Looker Studio, Tableau, and Power BI, together with the data in the analytics system.

These external reporting tools need to be authenticated for access to the storage in the analytics system. They then run queries against this storage and display the results in graphs, charts, and other reporting user interface components.

To save on costs and computation, the analytics system might not open all of its storage to queries, instead exposing only a dedicated access layer for these integrations.

For this reason, analytics systems might also offer a way to write the raw data directly into an external data warehouse so that users can build their own query systems without having to worry about the limitations and restrictions of proprietary storage access.

Broadly speaking, the three types of access described in this section could be categorized as follows:

- Reports in the analytics system itself are useful for quick ad hoc analysis with access to the full, processed data set. These reports might be subject to limitations and restrictions for data access, and the types of reports that can be built are predetermined by the analytics system itself.

- Reports in an external tool allow you to choose how to build the visualizations yourself. This is useful if you want to use software that your organization is already familiar with, and if you want to keep access to these reports separate from access to the analytics system.

- Reports against an external data warehouse are the most powerful way of taking control over reporting. This approach also requires the most work and the most know-how, because to query the data in the data warehouse you need to be familiar with both the schema of the analytics system and the schema of your own data store. This is often also the most costly option, because you need to bear the costs of storage and querying for the data warehouse.
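Querying an external data warehouse usually means writing SQL against tables whose structure follows the export schema. The sketch below uses Python’s built-in `sqlite3` module as a stand-in for a cloud data warehouse; the `events` table, its column names, and the rows are made up for illustration and do not follow any vendor’s export format.

```python
import sqlite3
from typing import List, Tuple


def purchases_by_status(conn: sqlite3.Connection) -> List[Tuple[str, int]]:
    # Writing this query requires knowing the schema: table name, column names, and event names.
    return conn.execute(
        """
        SELECT user_status, COUNT(*) AS purchases
        FROM events
        WHERE event_name = 'purchase'
        GROUP BY user_status
        ORDER BY user_status
        """
    ).fetchall()


conn = sqlite3.connect(":memory:")  # in-memory stand-in for a data warehouse
conn.execute(
    "CREATE TABLE events (client_id INTEGER, event_name TEXT, event_timestamp TEXT, user_status TEXT)"
)
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?, ?)",
    [
        (12345, "purchase", "2023-09-08T07:18:34Z", "loyal"),
        (12345, "purchase", "2023-09-08T09:02:11Z", "loyal"),
        (67890, "page_view", "2023-09-08T10:15:00Z", "new"),
        (67890, "purchase", "2023-09-08T10:20:45Z", "new"),
    ],
)
print(purchases_by_status(conn))  # [('loyal', 2), ('new', 1)]
```

If the export schema stored user properties in a nested or differently named column, this query would have to change, which is exactly why familiarity with both schemas matters.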

As a technical marketer, the better you understand the journey of a single data point collected by a tracker, pre-processed by a collector, aligned against a schema by the processor, deposited in storage, and made available for reporting and integrations, the better you will be able to organize the data generated by your organization.

Key takeaway #1: Data flows through the pipeline

The combination of data infrastructure and data architecture for an analytics system is called the “data pipeline”. It usually comprises a tracker for collecting information that needs to be tracked, a collector for pre-processing and validating the tracker’s work, a processor for adjusting the data to match a specific schema, and storage for storing the data. There can be many other components in the pipeline, but on a general level these four are what you’d typically encounter.

Key takeaway #2: Schema is the backbone of a data model

The schema is a blueprint that determines the structure and utility of the collected data. It’s what turns the parameter-based data from the tracker into units that resemble each other in type, form, and function. The schema can be used to validate the incoming data, so that events missing required values are flagged as problematic, to be discarded or fixed at a later time. The schema also instructs how the data is stored for later use in queries and integrations.

Key takeaway #3: Activation is the most difficult part of the pipeline

Activation, or how the data is actually utilized, is difficult to encode into the pipeline. That’s because it’s dependent on the business questions, integrated tools, skills of the people who query the data, and the context of how the data is intended to be utilized. Nevertheless, reports and integrations need to be built to support the existing use cases as well as possible future use cases that haven’t yet been thought of.